On 30 October, Prof. Naomi Tadmor led a workshop at the University of Sheffield, hosted by the Sheffield Centre for Early Modern Studies. In what follows, I briefly summarise Tadmor’s presentation, and then provide some reflections related to my own work, and to Linguistic DNA.

The key concluding points that Tadmor put forward are, I think, important for any work with historical texts, and thus also crucial to historical research:

- Understanding historical language (including word meaning) is necessary for understanding historical texts.

- To understand historical language we must analyse it in context.

- Analysing historical language in context requires close reading.

Whether we identify as historians, linguists, corpus linguists, literary scholars, or otherwise, we would do well to keep these points in mind.

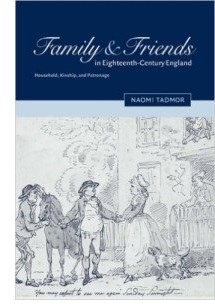

Tadmor’s take on historical keywords

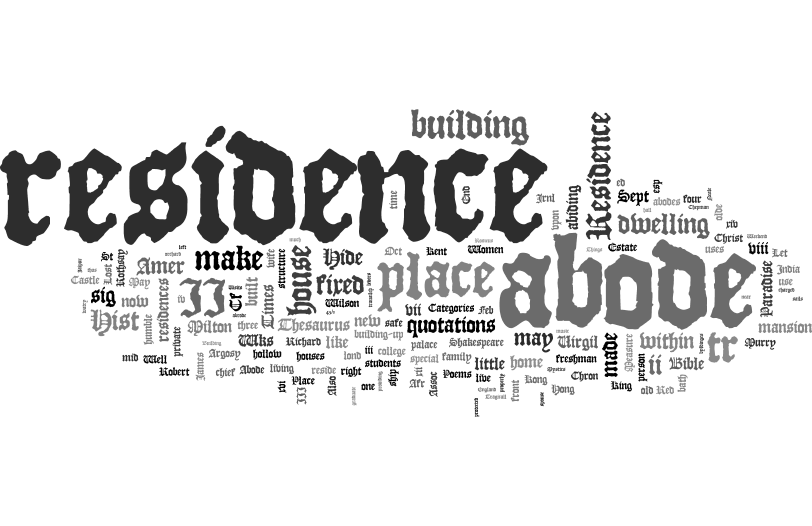

Tadmor’s specific arguments in the masterclass focused on kinship terms. In Early Modern English (EModE), kinship terms such as brother, mother, father, sister, and associated terms like family and friend, had a broad array of referents, many of which are unlikely to be intuitive to a speaker of Present Day English (PDE). Evidence shows, for example, that family often referred to all of the individuals living in a household, including servants, to the possible exclusion of biological relations living outside of the household. The paper Tadmor asked us to read in advance (first published in 1996), supplemented with other examples at the masterclass, provides extensive illustrations of the nuance of family and other kinship terms.

In EModE, there was also a narrow range of semantic or pragmatic implications related to kinship terms: these meanings generally involved social expectations, social networks, or social capital. So, father could refer to ‘biological father’ or ‘father-in-law’ (or even ‘King’), and implied a relationship of social expectation (rather than, for example, a relationship of affection or intimacy, as might be implied in PDE).

By identifying both the array of referents and the implications or senses conveyed by these kinship terms, Tadmor provides a thorough illustration of the terms’ lexical semantics. We can see this method as being motivated by historical questions (about the nature of Early Modern relationships); driven in its first stage by lexicology (insofar as it begins by asking about words, their referents, and senses); and then, in a final stage, employing lexicological knowledge to analyse texts and further address the initial historical questions. Tadmor avoids circularity by using one data set (in her 1996 paper) to identify a hypothesis regarding lexical semantics, and another data set to test her hypothesis.

What do these observations about lexical semantics tell us about history? As Tadmor notes, it is by identifying these meanings that we can begin to understand categories of social actions and relationships, as well as motivations for those actions and relationships. Perhaps more fundamentally, it is only by understanding semantics in historical texts that we can begin to understand the texts meaningfully.

A Corpus Linguist’s take on Tadmor’s methods

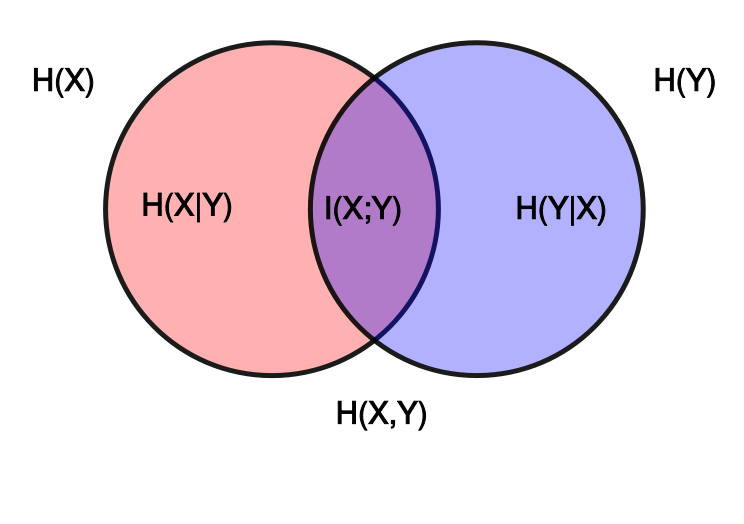

Reflecting on Tadmor’s talk, I’m reminded of the utility of the terms semasiology and onomasiology. In semantic research, semasiology is an approach which takes a term as its object of inquiry, and proceeds to identify the meanings of that term. Onomasiology is an approach which begins with a meaning, and then identifies the various terms for expressing it. Tadmor’s method is largely semasiological, insofar as it looks at the meanings of the term family and other kinship terms. This approach begins in a relatively straightforward way: find all of the instances of the word (or lemma), and you can then identify its various senses. The next step is more difficult: how do you distinguish its senses? In linguistics, a range of methods is available, with varying degrees of rigour and reproducibility, and it is important that these methods be outlined clearly.

Tadmor’s study is also onomasiological, as she compares the different ways (often within a single text) of referring to a given member of the household family. This approach is less straightforward: how do you identify each time a member of the family is referred to? Again, a range of methods is available, each with its own advantages and disadvantages. A clear statement and justification of the choice of method renders any study more rigorous. In my experience, thinking in terms of onomasiology and semasiology helps in developing a systematic and rigorous study.

Semasiology and onomasiology allow us to distinguish types of study and approaches to meaning, which can in turn help render our methods more explicit and clear. Similarly, distinguishing editorially between a word (e.g. family) and a meaning (e.g. ‘family’) is useful for clarity. Indeed, thinking methodologically in terms of semasiology and onomasiology encourages editorial clarity about terms and meanings. In Tadmor’s 1996 paper, double quotes (e.g. “family”) are used to refer to either the word family or the meaning ‘family’ at various points. Such a paper could at times be made clearer, it seems to me, by adopting consistent editorial conventions like those used in linguistics (e.g. quotes or all caps for meanings, italics for terms). The distinction between a term and a meaning is by nature not always clear or certain: that difficulty is all the more reason for journals to adhere to rigorously defined editorial conventions.

From the distinction between terms and concepts, we can move to the distinction between senses and referents. It is important to be explicit both about changes in referent and changes in sense, when discussing semasiological change. For example, as historians and linguists, we must be sure that when we identify changes in a word’s referents (e.g. father referring to ‘father-in-law’), we also identify whether there are changes in its sense (e.g. ‘a relationship of social expectation’ or ‘a relationship of affection and intimacy’). When Thomas Turner refers to his father-in-law as father, he seems to be using the term, as identified by Tadmor, in its Early Modern sense implying ‘a relationship of social expectation’ rather than in the possible PDE sense implying ‘a relationship of affection and intimacy’. The terms referent and sense allow for this distinction, and are useful in practice when conducting this kind of semantic analysis.

Of course, if a term becomes polysemous, it can be applied to a new range of referents, with a new sense, or even with new implicatures or connotations. For example, we can imagine (perhaps counterfactually) a historical development in which family might have come to refer to cohabitants who were not blood relations. At the same time, in referring to those cohabitants who were not blood relations, family might have ceased to imply any kind of social expectation, social network, or social capital. That is, it’s possible for both the referent and the sense to change. In this case, as Tadmor has shown, that doesn’t seem to be what’s happened, but it’s important to investigate such possible polysemies.

Future possibilities: Corpus linguistics

As a corpus linguist, I’d be interested in investigating Tadmor’s semantic findings via a quantitative onomasiological study, looking more closely at selection probabilities. Such a study could ask research questions like:

- Given that an Early Modern writer is expressing ‘nuclear family’, what is the probability of using term a, b, etc., in various contexts?

- Given that a writer is expressing ‘household-family’, what is the probability of using term a, b, etc., in various contexts?

- Given that a writer is expressing ‘spouse’s father’ or ‘brother’s wife’, etc., what is the probability of using term a, b, etc., in various contexts?

These onomasiological research questions (unlike semasiological ones) allow us to investigate the probabilities of a selection process: given a meaning, a writer selects one term from a set of alternatives. Framing the question this way renders statistical analyses more robust. Changes in probabilities of selection over time are a useful illustration of onomasiological change, which is an essential part of semantic change.
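To make the idea of selection probabilities concrete, here is a minimal sketch in Python of how P(term | meaning) could be estimated as a relative frequency, once each relevant token has been annotated for the meaning expressed and the term chosen. It is an illustration only, not a description of any existing study: the `observations` list is invented toy data, and the function name `selection_probabilities` is hypothetical.

```python
from collections import Counter, defaultdict

# Invented toy annotations (NOT real corpus data): each tuple is
# (meaning expressed, term chosen by the writer).
observations = [
    ("household-family", "family"),
    ("household-family", "family"),
    ("household-family", "household"),
    ("household-family", "house"),
    ("spouse's father", "father"),
    ("spouse's father", "father"),
    ("spouse's father", "father-in-law"),
]


def selection_probabilities(pairs):
    """Estimate P(term | meaning) as a relative frequency per meaning."""
    counts = defaultdict(Counter)
    for meaning, term in pairs:
        counts[meaning][term] += 1
    return {
        meaning: {term: n / sum(terms.values()) for term, n in terms.items()}
        for meaning, terms in counts.items()
    }


probs = selection_probabilities(observations)
for meaning, dist in probs.items():
    for term, p in sorted(dist.items()):
        print(f"P({term!r} | {meaning!r}) = {p:.2f}")
```

Context (period, genre, text type) could be added as a further conditioning key in the same way. Comparing the resulting distributions across periods would then give a simple picture of onomasiological change.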

And for Linguistic DNA?

For Linguistic DNA, I see (at least) two major questions related to Tadmor’s work:

- Can automated distributional analysis uncover the types of phenomena that Tadmor has uncovered for family?

- What is a concept for Tadmor, and how can her work inform our notion of a concept?

In response to the first question, it is certainly possible that distributional analysis can reflect changing referents (such as ‘father-in-law’ referred to as father). Hypothetically, the distribution of father with a broad array of referents might entail a broad array of lexical co-occurrences. In practice, however, this might be very, very difficult to discern. Hence Tadmor’s call for close reading. It is perhaps more likely that the sense (as opposed to referent) of father as ‘a relationship involving social expectations’ might be reflected in co-occurrence data: hypothetically, father might co-occur with words related to social expectation and obligation. We have evidence that semantically related words tend to constitute only about 30% of significant co-occurrences. Optimistically, it might be that the remaining 70% of words do suggest semantic relationships, if we know how to interpret them—in this case, maybe some co-occurrences with family would suggest the referents or implications discussed here. Pessimistically, it might be that if only 30% of co-occurring words are semantically related, then there would be an even lower probability of finding co-occurring words that reveal such fine semantic or pragmatic nuances as these. Thanks to Tadmor’s work, Linguistic DNA might be able to use family as a test case for what can be revealed by distributional analysis.
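As a rough illustration of what co-occurrence data looks like, the following Python sketch counts the words that appear within a small window around a node word and scores them with pointwise mutual information (PMI). This is a simplified stand-in and not Linguistic DNA's actual procedure: the `tokens` are invented toy text, the function name `cooccurrence_pmi` and the window size are hypothetical, and PMI is just one of several association measures that could be used to decide which co-occurrences count as significant.

```python
import math
from collections import Counter

# Invented toy text (NOT real Early Modern data), already tokenised.
tokens = ("my father expects my duty and obedience . "
          "the father commands duty from his family . "
          "the family dined and the servants dined").split()


def cooccurrence_pmi(tokens, node, window=3):
    """Return {collocate: PMI} for words within `window` tokens of `node`."""
    total = len(tokens)
    freq = Counter(tokens)
    co = Counter()
    for i, tok in enumerate(tokens):
        if tok != node:
            continue
        lo, hi = max(0, i - window), min(total, i + window + 1)
        # Count every token in the window except the node occurrence itself.
        co.update(t for j, t in enumerate(tokens[lo:hi], start=lo) if j != i)
    pairs_total = sum(co.values())
    # PMI compares how often a word appears near the node with how often
    # it would be expected there given its overall frequency in the text.
    return {
        w: math.log2((n / pairs_total) / (freq[w] / total))
        for w, n in co.items()
    }


scores = cooccurrence_pmi(tokens, "father")
for word, score in sorted(scores.items(), key=lambda kv: -kv[1])[:5]:
    print(f"{word}\t{score:.2f}")
```

In a list like this, a collocate such as duty would be the kind of evidence for a sense involving social expectation. Whether such collocates stand out in real data, and whether the remaining collocates can be interpreted, is exactly the open question raised above.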

What is a concept? Tadmor (1996) doesn’t define concept, and sometimes switches quickly, for example, between discussing the concept ‘family’ and the word family, which can be tricky to follow. At times, concept for Tadmor seems to be similar to definition—a gloss for a term. At other times, concept seems to be broader, suggesting something perhaps with psycholinguistic reality, a sort of notion or idea held in the mind that might relate to an array of terms. Or, concept seems to relate to discourses, to shared social understandings that are shaped by language use. Linguistic DNA is paying close attention to operationalising and/or defining concept in its approach to conceptual and semantic change in EModE. Tadmor’s work points in the same direction that interests us, and the vagueness of concept which Tadmor engages with is vagueness that we are engaging with as well.

In May, Susan, Iona and Mike travelled to Utrecht, at the invitation of Joris van Eijnatten and Jaap Verheul. Together with colleagues from Sheffield’s History Department, we presented the different strands of Digital Humanities work ongoing at Sheffield. We learned much from our exchanges with Utrecht’s
