Introduction

When discussing proximity data and distributional methods in corpus semantics, it is common for linguists to refer to Firth’s famous “dictum”, ‘you shall know a word by the company it keeps!’ In this post, I look a bit more closely at the theoretical traditions from which this approach to semantics in contexts of use has arisen, and the theoretical links between this approach and other current work in linguistics. (For a synopsis of proximity data and distributional methods, see previous posts here, here, and here.)

Language as Use

Proximity data and distributional evidence can only be observed in records of language use, like corpora. The idea of investigating language in use reflects an ontology of language: the idea that language is language in use. If that basic definition is accepted, then the linguist’s job is to investigate language in use, and corpora constitute an excellent source of concrete evidence for language in use in specific contexts. This prospect is central to perhaps the greatest rift in 20th-century linguistics: between, on the one hand, generative linguists who argued against evidence of use (as a distraction from the mental system of language), and, on the other hand, most other linguists, including those in pragmatics, sociolinguistics, Cognitive Linguistics, and corpus linguistics, who see language in use as the central object of study.

Dirk Geeraerts, in Theories of Lexical Semantics, provides a useful, concise summary of the theoretical background to distributional semantics using corpora. An explicit valuation of language in use can be traced through the work of linguistic anthropologist Bronislaw Malinowski, who argued in the 1930s that language should only be investigated, and could only be understood, in contexts of use. Malinowski was an influence on Firth, who in turn influenced the next generation of British corpus linguists, including Michael Halliday and John Sinclair. Firth himself was already arguing in the 1930s that ‘the complete meaning of a word is always contextual, and no study of meaning apart from context can be taken seriously’. Just a bit later, Wittgenstein famously asserted in Philosophical Investigations that linguistic meaning is inseparable from use, an assertion quoted by Firth, and echoed by the philosopher of language John Austin, who was seminal in the development of linguistic pragmatics. Austin approached language as speech acts: instances of use in complex, real-world contexts that could only be understood as such. The focus on language in use can subsequently be seen throughout later 20th-century developments in the fields of pragmatics and corpus research, as well as in sociolinguistics. Thus, some of the early theoretical work that facilitated the rise of corpus linguistics, and of distributional methods, can first be seen in the spheres of philosophy and even anthropology.

Meaning as Contingent, Meaning as Encyclopedic

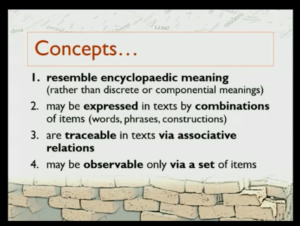

In order to argue that lexical co-occurrence in use is a source of information about meaning, we must also accept a particular definition of meaning. Traditionally, it was argued that there is a neat distinction between constant meaning and contingent meaning.1 Constant meaning was viewed as the meaning related to the word itself, while contingent meaning was viewed as not related to the word itself, but instead related to broader contexts of use, including the surrounding words, the medium of communication, real-world knowledge, connotations, implications, and so on. Contingent meaning was by definition contributed by context, and context is exactly what is examined in proximity measures and distributional methods. So although distributional methods are today generally employed to investigate semantics, they in fact investigate an element of meaning that was traditionally often considered peripheral to semantics rather than central to it.
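To make concrete what it means for proximity measures to examine context, here is a minimal sketch in Python that counts the words occurring within a fixed window of a node word. The function name `collocates`, the window size of two tokens, and the toy sentences are all illustrative assumptions rather than the method of any particular project or toolkit; real studies work with large corpora and usually apply statistical association measures on top of raw counts.

```python
from collections import Counter

def collocates(tokens, node, window=2):
    """Count the words found within `window` tokens of each occurrence of `node`."""
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok != node:
            continue
        # Clamp the window to the start and end of the token list.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # skip the node word itself
                counts[tokens[j]] += 1
    return counts

# Invented toy "corpus", for illustration only.
text = "the bank approved the loan . the river bank flooded . the bank raised interest"
tokens = text.split()

# Words that keep company with "bank" in this tiny sample.
print(collocates(tokens, "bank").most_common())
```

Even in this tiny sample, the neighbours of "bank" (such as "river" or "approved") are the kind of evidence that was traditionally classed as contingent meaning.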

In relation to this emphasis on contingent meaning, corpus linguistics has developed alongside the theory of encyclopedic semantics. In encyclopedic semantics, it is argued that any dividing line between constant and contingent meaning is arbitrary. Thus, corpus semanticists who use proximity measures and distributional approaches do not often argue that they are investigating contingent meaning. Instead, they may argue that they are investigating semantics, and that semantics in its contemporary (encyclopedic) sense is a much broader thing than in its more traditional sense.

Distributional methods therefore represent not only an ontology of language as use, but also an ontology of semantics as including what was traditionally known as contingent meaning.

To be continued…

Having discussed the theoretical and philosophical underpinnings of distributional methods here, I will go on to discuss the practical background of these methods in the next blog post.
