This blog post completes our series of three extracts from Susan Fitzmaurice’s paper on “Concepts and Conceptual Change in Linguistic DNA”. (See parts 1 and 2.)

The supra-lexical approach to the process of concept recognition that I’ve described depends upon an encyclopaedic perspective on semantics (cf. Geeraerts, 2010: 222-3). This is fitting as ‘encyclopaedic semantics is an implicit precursor to or foundation of most distributional semantics or collocation studies’ (Mehl, p.c.). However, such studies do not typically pause to model or theorise before conducting analysis of concepts and semantics as expressed lexically. In other words, semasiological (and onomasiological) studies work on the premise of ready-made or at least ready-lexicalised concepts, and proceed from there. This means that although they depend upon the prior application of encyclopaedic semantics, they themselves do not need to model or theorise this semantics, because it belongs to the cultural messiness that yields the lexical expressions that they then proceed to analyse.

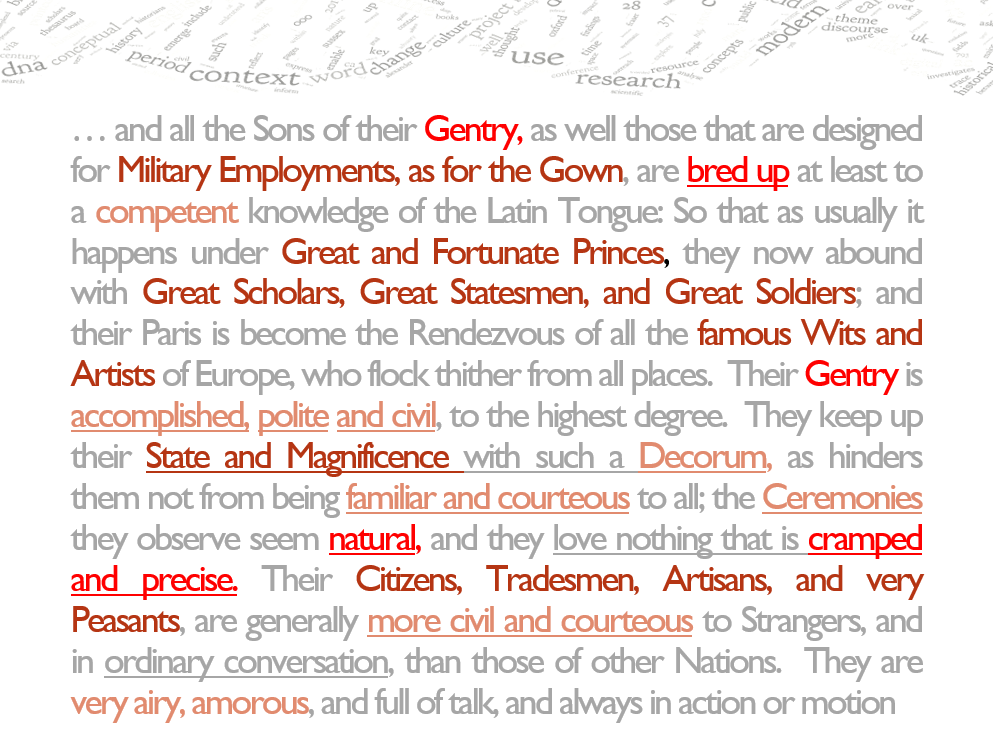

For LDNA, concepts are not discrete or componential lexical semantic meanings; neither are they abstract or ideal. Instead, they consist of associations of lexical/phrasal/constructional semantic and pragmatic meanings in use.

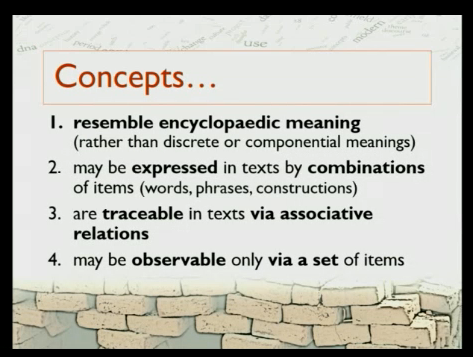

This encyclopaedic perspective suggests the following operationalisation of a concept for LDNA:

- Concepts resemble encyclopaedic meanings (which are temporally and culturally situated chunks of knowledge about the world expressed in a distributed way) rather than discrete or componential meanings. [This coincides with non-modular theories of mind, which adopt a psychological approach to concepts.]

- Concepts can be expressed in texts by (typically a combination of) words, phrases, constructions, or even by implicatures or invited inferences (and possibly by textual absences).

- Concepts are traceable in texts primarily via significant syntagmatic (associative) relations (of words/phrases/constructions/meanings) and secondarily via significant paradigmatic (alternate) relations (of words/phrases/constructions/meanings).

- A concept in a given historical moment might not be encapsulated in any observed word, phrase, or construction, but might instead only be observable via a complete set of words, phrases, or constructions in syntagmatic or paradigmatic relation to each other.
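The third point above, that concepts are traceable via significant syntagmatic relations, can be illustrated with a small sketch. This is not LDNA's actual procedure: it assumes pointwise mutual information (PMI) over a fixed token window as one common association measure, and the function name `cooccurrence_pmi`, the window size, and the toy token list are all invented for illustration.

```python
import math
from collections import Counter


def cooccurrence_pmi(tokens, window=5):
    """Score unordered word pairs that co-occur within `window` tokens.

    Returns a dict mapping (word_a, word_b), sorted alphabetically,
    to a PMI score. Illustrative only: PMI is an assumed measure here.
    """
    word_counts = Counter(tokens)
    pair_counts = Counter()

    # Count each pair once for every time the two words fall in one window.
    for i, left in enumerate(tokens):
        for right in tokens[i + 1 : i + 1 + window]:
            if left != right:
                pair_counts[tuple(sorted((left, right)))] += 1

    total_words = len(tokens)
    total_pairs = sum(pair_counts.values())

    scores = {}
    for (a, b), n in pair_counts.items():
        p_pair = n / total_pairs
        p_a = word_counts[a] / total_words
        p_b = word_counts[b] / total_words
        # PMI: how much more often the pair occurs than chance would predict.
        scores[(a, b)] = math.log2(p_pair / (p_a * p_b))
    return scores


# Toy input, invented for illustration (not corpus data).
toy_tokens = "trade and commerce bring wealth and trade brings commerce".split()
for pair, score in sorted(cooccurrence_pmi(toy_tokens, window=2).items()):
    print(pair, round(score, 2))
```

Raw PMI tends to favour rare words, so a sketch like this would normally be paired with a minimum frequency threshold. Either way, the highest-scoring associations are only candidates, which still need to be tested through close reading.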

It is worth noting, however, that concept recognition is particularly difficult (for the automatic processes built into LDNA) because it ordinarily depends upon the level of cultural literacy possessed by a reader. While we cannot incorporate this quality as a process, we can take it into account by testing distant reading through close reading.

As well as being encyclopaedic, our approach is also experiential, in that the conceptual structure of early modern discourse is a reflection of the way early modern people experienced the world around them. That discourse presents a particular subjective view of the world, with a hierarchical network of preferences that emerges as a network of concepts in discourse. In this way we also assume that the organisation of concepts is perspectival.

Concluding remarks: Testing and tracking conceptual change across time and style

All being well, if we succeed in visualising the results of an iterative and developing set of procedures to inspect the data from these large corpora, we hope to be able to discern and locate the emergence of concepts in the universe of early modern English print. A number of questions arise about where and how these will show up.

For instance, following our hypothesis, will we see the cementation of a concept in the persistent co-occurrence in particular contexts of candidate conjuncts (both binomials and alternates), bigrams, and ultimately, ‘keywords’? (e.g. ‘man of business’ → ‘businessman’ in late Modern English newspapers)
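One way to picture this question is to compare how often a multi-word expression and its single-word counterpart occur in each period. The sketch below is only an illustration under stated assumptions: the function name `phrase_rate_by_period`, the period labels, and the two toy sentences are invented, tokenisation is naive whitespace splitting, and none of it reflects LDNA's actual pipeline or data.

```python
from collections import defaultdict


def phrase_rate_by_period(documents, phrase):
    """Return occurrences of `phrase` per 1,000 tokens for each period.

    `documents` is an iterable of (period_label, text) pairs.
    Tokenisation is a naive lowercase whitespace split (an assumption).
    """
    phrase_tokens = phrase.lower().split()
    n = len(phrase_tokens)
    hits = defaultdict(int)
    sizes = defaultdict(int)

    for period, text in documents:
        tokens = text.lower().split()
        sizes[period] += len(tokens)
        # Slide over the text and count exact matches of the phrase.
        for i in range(len(tokens) - n + 1):
            if tokens[i : i + n] == phrase_tokens:
                hits[period] += 1

    return {p: 1000 * hits[p] / sizes[p] for p in sizes if sizes[p]}


# Toy documents with hypothetical period labels (not corpus data).
toy_documents = [
    ("period_1", "he was known as a man of business in the city"),
    ("period_2", "the businessman was known in the city"),
]
for expression in ("man of business", "businessman"):
    print(expression, phrase_rate_by_period(toy_documents, expression))
```

If the hypothesis holds, the rate of the phrasal form would be expected to fall as the rate of the single-word form rises. Whether that pattern actually appears is a question for the corpus, not something this sketch demonstrates.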

And, as part of the notion of context, it is worth considering the role of discourse genre in the emergence of a concept and in conceptual change. For instance, if it is the case that a concept emerges, not as a keyword, but in the form of an association of expressions that functions as a loose paraphrase, is this kind of process more likely to occur in a specific discourse genre than in general discourse? In other words, is it possible that technical or specialist discourses will be the locus of new concepts, concepts which might diffuse gradually into public and more general ones? (e.g. dogma, law, science → newspapers, narrative, etc.)

What we hope to do is to make our approach manifest and our results visual. For instance, the emergence of a concept might be envisaged as clusters of texts rising up on the terrain representing a certain feature. It is also worth remembering that these clusters might not simply change gradually over time, rising and falling across the terrain; there might instead be islands of certain features that appear in distant time periods and in disparate genres and sub-genres. All of that can be identified by the computer, but we have to make sense of it as close readers afterwards.

References

Geeraerts, Dirk. 2010. Theories of Lexical Semantics. Oxford: OUP.