This blog post features the second of three extracts from Susan Fitzmaurice’s paper on “Concepts and Conceptual Change in Linguistic DNA”. (See previous post.)

Before tackling the problem of actually defining the content of a concept ‘from below’, we need to imagine ourselves in a position to recognize the emergence of material that is a candidate for consideration as a concept. Let’s briefly consider the question of ‘when is a concept’; in other words, how will we recognize something that is relevant, resonant and important in historical, cultural and political terms for our periods of interest?

In a sense that is far from trivial, we want our research process to perform the discovery work of an innocent reader: a reader who approaches a universe of discourse without an agenda, but with a will to discover what the text yields up as worthy of notice. This innocent reader is, of course, an ideal reader. Because humans are pattern finders, pattern matchers and meaning makers, it is virtually impossible to imagine a process that is truly ab initio. Indeed, it is rare for a reader not to be primed by the cotext or broader context to notice specific features, characteristics or meanings.

The aim is for our processes to imitate the intuitive, intelligent scanning that human readers perform as they survey the universe of discourse in which they are interested (here, literary and historical documents). We assume that readers gradually begin to notice patterns, perhaps prominent combinations or associations, that appear in juxtaposition in some places and in connection in others (Divjak & Gries, 2012). The key process is noticing, in the text, the formation of ideas that gather cohesion and content in linguistic expression. We hypothesize that, in the process of noticing, the reader begins to attribute increasing weight to the meanings they locate in the text. One model for this hypothesis is the experience of the foreign language learner who reads a text with her attention drawn to the expressions she recognizes and can construe.

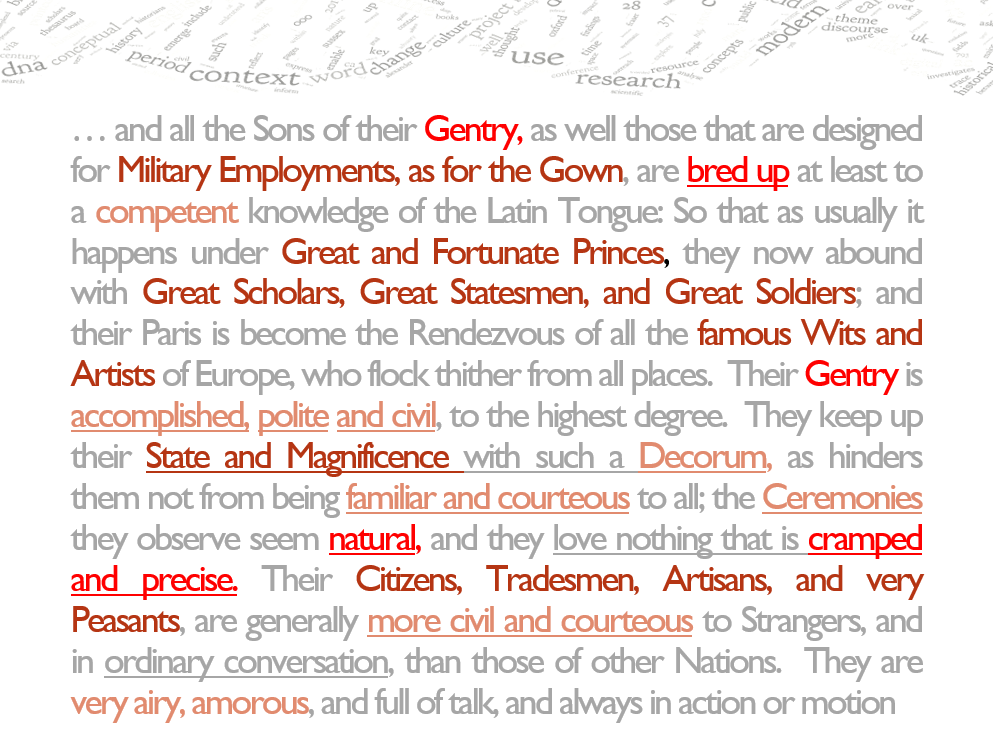

The principal problem posed by our project is therefore to extract from the discourse material that we might discern as potential concepts. In other words, we aim to identify a concept from the discourse inwards, by inspecting the language, instead of defining a concept from its content outwards (i.e. starting with a term and discerning its meaning). If we move from the discourse inwards, the meanings to which we attribute weight may be implicit and distributed across a stretch of text (a text window).

That is, the meanings we notice as relevant might not be encapsulated in individual lexical items or character strings within a simple syntactic frame. Recognizing this requires that we resist the temptation to treat a word or a character string as coterminous with a concept. Indeed, the more we associate relevance with, say, the frequency of a particular word or character string in a sub-corpus, the less likely we are to look beyond the word as an index of a concept. To remain open and receptive in the process of candidate concept recognition, we need to expand both the range of things we inspect and the scope of the context we read.

The linguistic material relevant to the identification of a concept will consist of a set of associated expressions that occur in concentration within a stretch of text. Importantly, this material may consist of lexical items, phrases or sentences, and may be conveyed metaphorically as well as literally, and probably pragmatically (by implicature and invited inference) as well as semantically. If the linguistic elaboration (definition, paraphrase, implication) of a concept precedes its lexicalization, it is reasonable to assume that expressions occurring regularly and frequently, at varying degrees of proximity within a window, will aid the identification of a concept.
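One way to make ‘regularly and frequently occurring expressions in degrees of proximity within a window’ concrete is to score pairs of words that co-occur within a fixed-width window, weighting closer pairs more heavily. The sketch below is only an illustration under stated assumptions: it takes already tokenized text, uses a hypothetical window of a few tokens and a hypothetical inverse-distance weight, and handles single words only, whereas the material described above may also be phrases or implied meanings. It is not presented as the Linguistic DNA method.

```python
from collections import defaultdict


def proximity_cooccurrence(tokens, window=5):
    """Score unordered word pairs by how often, and how closely, they co-occur.

    Each pair of tokens at most `window` positions apart adds 1/distance to
    the pair's score, so adjacent words count more than distant ones.
    Both the window size and the 1/distance weight are illustrative choices.
    """
    scores = defaultdict(float)
    for i, left in enumerate(tokens):
        # Look ahead only, so each token pair is visited once.
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            right = tokens[j]
            if left == right:
                continue  # ignore a word co-occurring with itself
            pair = tuple(sorted((left, right)))  # treat (a, b) and (b, a) alike
            scores[pair] += 1.0 / (j - i)
    return dict(scores)


if __name__ == "__main__":
    # Toy token sequence, invented for illustration.
    print(proximity_cooccurrence(["a", "b", "c", "a", "b"], window=2))
    # prints {('a', 'b'): 2.5, ('a', 'c'): 1.5, ('b', 'c'): 1.5}
```

Ranking pairs by these scores surfaces associations that recur in proximity, rather than the raw frequency of any single word, which is closer to the kind of evidence the argument above calls for.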

The scope of the context in which a concept appears is likely to be wider than the phrase or sentence customarily treated as the context of a keyword in collocation studies. This context is akin to the modern notion of the paragraph, that is, the unit of discourse that conventionally treats a topic or subject together with the commentary that makes up its content. The stretch of text relevant for the identification of conceptual material may thus amount to a paragraph, a page, or a short text.
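To illustrate why the size of the context matters, the sketch below counts the units (sentences or paragraphs) in which each pair of words co-occurs, and then lists the pairs that share a paragraph but never a sentence. These are the kind of distributed associations that a sentence-level collocation window would miss. It assumes, hypothetically, that paragraphs are separated by blank lines and sentences end in ‘.’, ‘!’ or ‘?’, and it uses a naive regex tokenizer. Real historical text would need spelling normalization and far better segmentation, and a page or short text could replace the paragraph as the unit.

```python
import re
from collections import Counter
from itertools import combinations


def split_paragraphs(text):
    # Hypothetical convention: paragraphs are separated by one or more blank lines.
    return [p for p in re.split(r"\n\s*\n", text) if p.strip()]


def split_sentences(paragraph):
    # Naive segmentation on terminal punctuation; adequate only for toy input.
    return [s for s in re.split(r"[.!?]+", paragraph) if s.strip()]


def tokenize(unit):
    # Lowercased alphabetic runs; ignores spelling variation entirely.
    return re.findall(r"[a-z]+", unit.lower())


def unit_cooccurrence(units):
    """Count, for each unordered word pair, how many units contain both words."""
    counts = Counter()
    for unit in units:
        vocab = sorted(set(tokenize(unit)))
        counts.update(combinations(vocab, 2))
    return counts


def paragraph_only_pairs(text):
    """Return pairs that co-occur in some paragraph but never within one sentence."""
    paragraphs = split_paragraphs(text)
    sentences = [s for p in paragraphs for s in split_sentences(p)]
    para_counts = unit_cooccurrence(paragraphs)
    sent_counts = unit_cooccurrence(sentences)
    return {pair for pair in para_counts if pair not in sent_counts}


if __name__ == "__main__":
    # Invented toy text: 'trade' and 'liberty' never share a sentence.
    sample = "Trade enriches. Liberty follows.\n\nLiberty grows."
    print(sorted(paragraph_only_pairs(sample)))
```

In the toy text, the pair ('liberty', 'trade') is found only at the paragraph level, which is the sense in which a wider window can capture associations that a sentence-bound keyword context cannot.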

The linguistic structure of a concept has been shown to be built both paradigmatically (via synonymy) and syntagmatically (via lexical associations, syntax, paraphrase). For our purposes, given that the task entails picking up clues to the construction of concepts from the linguistic material in the context, where ‘context’ is defined rather broadly, paradigmatic relations are likely to be less salient than syntagmatic relations such as paraphrase, vagueness and association, which may in turn matter more than predictable relations such as antonymy and polysemy.

See the final post in this Manifesto series.

References

Divjak, Dagmar & Stefan Th. Gries (eds). 2012. *Frequency effects in language learning and processing* (Vol. 1). Berlin: De Gruyter.