In February 2016, Linguistic DNA hosted Dr Kris Heylen as an HRI Visiting Fellow, strengthening our links with KU Leuven’s Quantitative Lexicology and Variational Linguistics research group. This post outlines the scheduled public events.

Next week, the Linguistic DNA project welcomes visiting scholar (and HRI Visiting European Fellow) Dr Kris Heylen of KU Leuven.

About Kris:

Kris is a researcher based in KU Leuven’s Quantitative Lexicology and Variational Linguistics research group. His research focuses on the statistical modelling of lexical semantics and lexical variation, and more specifically on the introduction of distributional semantic models into lexicological research. Alongside his fundamental research on lexical semantics, he also has a strong interest in exploring the use of quantitative, corpus-based methods in applied linguistic research, with projects in legal translation, vocabulary learning and medical terminology.

During his stay in Sheffield, Kris will be working alongside the Linguistic DNA team, playing with some of our data, and sharing his experience of visualizing semantic change across time, as well as talking about future research collaborations with others on campus. There will be several opportunities for others to meet with Kris and hear about his work, including a lecture and workshop (details below). Both events are free to attend.

Lecture: 3 March

On Thursday 3rd March at 5pm, Kris will give an open lecture entitled:

Tracking Conceptual Change: A Visualization of Diachronic Distributional Semantics

ABSTRACT (Kris writes):

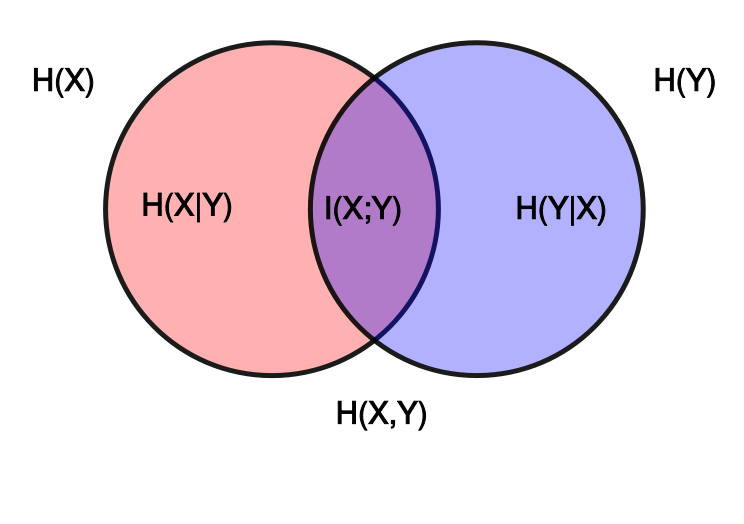

In this talk, I will present an overview of statistical and corpus-based studies of lexical variation and semantic change, carried out at the research group Quantitative Lexicology and Variational Linguistics (QLVL) in recent years. As a starting point, I’ll take the framework developed in Geeraerts et al. (1994) to describe the interaction between concepts’ variable lexical expression (onomasiology) and lexemes’ variable meaning (semasiology). Next, I will discuss how we adapted distributional semantic models, as originally developed in computational linguistics (see Turney and Pantel 2010 for an overview), to the linguistic analysis of lexical variation and change.

With two case studies, one on the concept of immigrant in Dutch and one on positive evaluative adjectives in English (great, superb, terrific, etc.), I’ll illustrate how we have used visualisation techniques to investigate diachronic change in both the construal and the lexical expression of concepts.

All are welcome to attend this guest lecture, which takes place at the Humanities Research Institute (34 Gell Street). It is also possible to come for dinner after the lecture, though places may be limited; those interested are asked to get in touch with Linguistic DNA beforehand (by Tuesday 1st March).

Workshop: 7 March

On Monday 7th March, Kris will run an open workshop on visualizing language, sharing his own experiments with Linguistic DNA data. Participation is open to students and staff, but numbers are limited and advance registration is required. To find out more, please email Linguistic DNA (deadline: 4pm, Friday 4th March). Those at the University of Sheffield can reserve a place at the workshop using the Doodle poll.

Anyone who would like the opportunity to meet with Kris to discuss research collaborations should get in touch with him via Linguistic DNA as soon as possible so that arrangements can be made.