June 2016:

For the past couple of months, our rolling horizon has looked increasingly full of activity. This new blog post provides a brief update on where we’ve been and where we’re going. We’ll be aiming to give more thorough reports on some of these activities after the events.

Where we’ve been

In May, Susan, Iona and Mike travelled to Utrecht, at the invitation of Joris van Eijnatten and Jaap Verheul. Together with colleagues from Sheffield’s History Department, we presented the different strands of Digital Humanities work ongoing at Sheffield. We learned much from our exchanges with Utrecht’s AsymEnc and Translantis research programs, and enjoyed shared intellectual probing of visualisations of change across time. We look forward to continued engagement with each other’s work.

A week later, Seth and Justyna participated in This&THATCamp at the University of Sussex (pictured), with LDNA emerging second in a popular poll of topics for discussion at this un-conference-style event. Productive conversations across the two days covered data visualisation, data manipulation, text analytics, digital humanities and even data sonification. We hope to hear more from Julie Weeds and others when the LDNA team return to Brighton in September.

Next week, we’ll be calling on colleagues at the HRI to talk us through their experience visualising complex humanities data. Richard Ward (Digital Panopticon) and Dirk Rohman (Migration of Faith) have agreed to walk us through their decision-making processes, and talk through the role of different visualisations in exploring, analysing, and explaining current findings.

Where we’re going

The LDNA team are also gearing up for a summer of presentations:

- Justyna Robinson will be representing LDNA at the Sociolinguistics Symposium (Murcia, 15-18 June), as well as sharing the latest analysis from her longitudinal study of semantic variation, which focuses on polysemous adjectives in South Yorkshire speech. Catch LDNA in the general poster session on Friday (17th), and Justyna’s paper at 3pm on Thursday (16th). #SS21

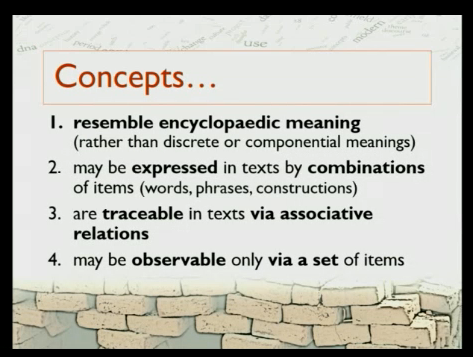

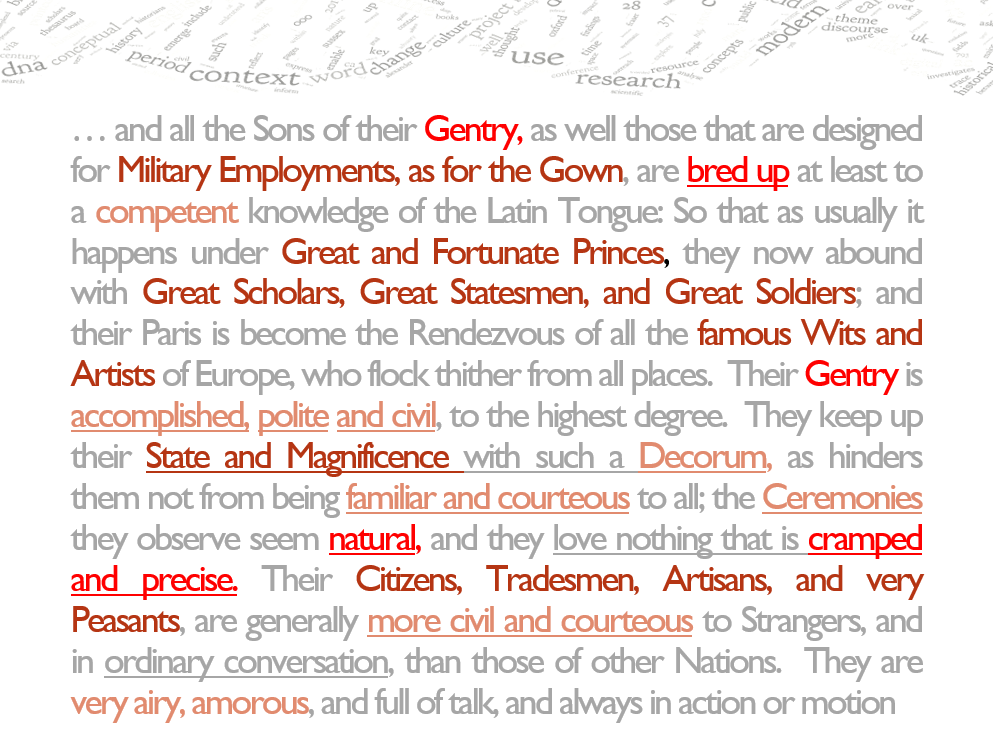

- Susan Fitzmaurice is in Saarland, as first guest speaker at the Historical Corpus Linguistics event hosted by the IDeaL research centre, also on Thursday (16th June) at 2:15pm. Her paper is subtitled “Discursive semantics and the quest for the automatic identification of concepts and conceptual change in English 1500-1800”. #IDeaL

- In July, the Glasgow LDNA team are Krakow-bound for DH2016 (11-16 July). The LDNA poster, part of the Semantic Interpretations group, is currently allocated to Booth 58 during the Wednesday evening poster session. Draft programme.

- Later in July, Iona heads to SHARP 2016 in Paris (18-22 July). This year, the bilingual Society are focusing on “Languages of the Book”. Iona’s contribution draws on her doctoral research (subtitle: European Borrowings in 16th and 17th Century English Translations of “the Book of Books”) and gives attention to the role of other languages in concept formation in early modern English (a special concern for LDNA’s work with EEBO-TCP).

- In August, Iona is one of several Sheffield early modernists bound for the Sixteenth Century Society Conference in Bruges. In addition to giving a paper in panel 241, “The Vagaries of Translation in the Early Modern World” (Saturday 20th, 10:30am), Iona will be hosting a unique LDNA poster session at the book exhibit. (Details to follow.)

- The following week (22-26 August), Seth, Justyna and Susan will be at ICEHL 19 in Essen. Seth and Susan will be talking LDNA semantics from 2pm on Tuesday 23rd.

Back in the UK, on 5 September, LDNA (and the University of Sussex) host our second methodological workshop, focused on data visualisation and linguistic change. Invitations to a select group of speakers have gone out, and we’re looking forward to a hands-on workshop using project data. Members of our network who would like to participate are invited to get in touch.

And back in Sheffield, LDNA is playing a key role in the 2016 Digital Humanities Congress, 8-10 September, hosting two panel sessions dedicated to textual analytics. Our co-speakers include contacts from Varieng and CRASSH. Early bird registration ends 30th June.