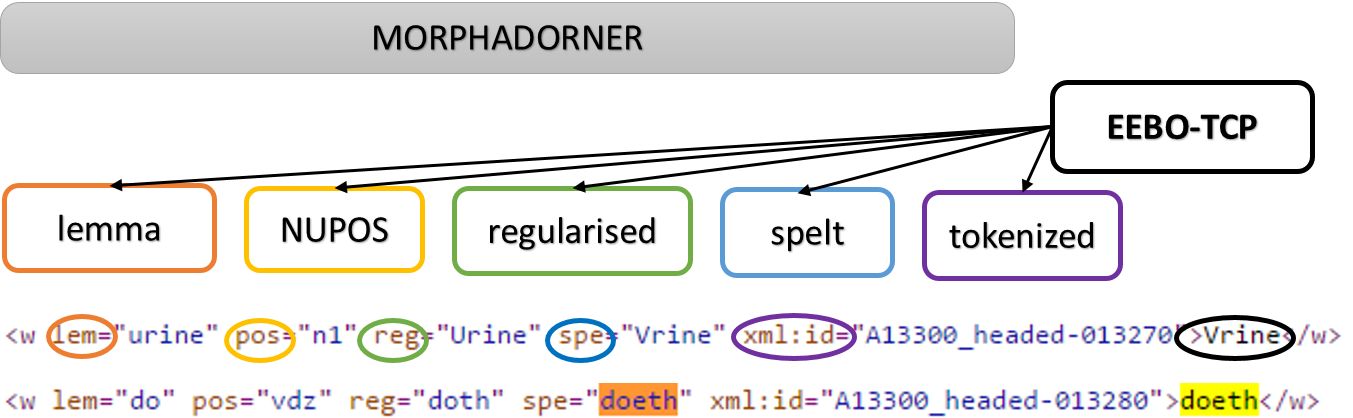

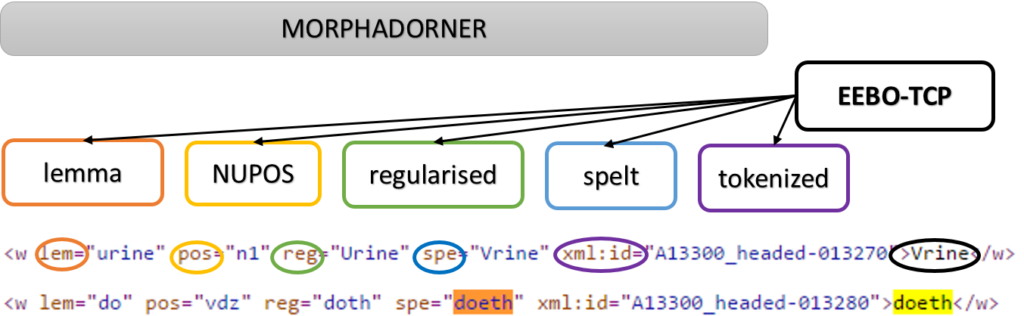

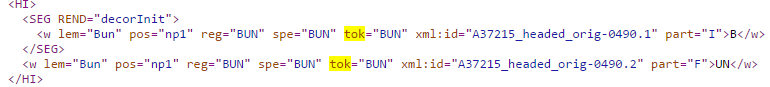

This post from August 2015 continues the comparison of VARD and MorphAdorner, tools for tackling spelling variation in early modern English. (See earlier posts here and here.) As of 2018, data on our public interface was prepared with an updated version of MorphAdorner and some additional curation from Martin Mueller at Northwestern.

This week, we’ve replaced the default VARD set-up with a version designed to optimise the tool for EEBO. In essence, this includes a lengthier set of rules to guide the changing of letters, and lists of words and variants that are better suited to the early modern corpus.

It is important to bear in mind that the best use of VARD involves someone ‘training’ it, supervising and to a large extent determining the correct substitutions. But because Linguistic DNA is tackling the whole EEBO-TCP corpus, and the mass of documents within it is far from homogeneous, it is difficult to optimise VARD effectively.

Doth VARD recognise the second-person singular?

Our first step with the EEBO set-up was to revisit our earlier understanding of how VARD handles verb conjugations for the second and third persons singular. We wrote a custom text to test the output (using the 50% threshold for auto-normalisation, as before):

If he lieth thou liest. When she believeth thou leavest. If thou believest not, he leaveth. Where hast thou been? When hadst thou gone? Where hath he walked? Where goest thou? Where goeth he? What doth he? What doeth he? What dost thou? What doest thou? What ist? What arte doing?

Most of the forms were modernised just as described in the previous post. However, some of the output gave cause for concern. In the first sentence, “liest” became “least”. Further on “goest” became “goosed”, “doest” was accepted as a non-variant, while both “hast” and “dost” were highlighted as unresolved variants. This output can be explained by looking at the variant and word lists and the statistical measures VARD uses.

VARD’s use of variants and word frequencies

Scrutinising the word and variant lists within the EEBO set-up showed that although the variant list recorded “doest” as an instance of “dost”, “doest” and not “dost” appeared in the word list, overriding that variant. Similarly, “ha’st” appears in the variant list as a form of “hast”, but “hast” is not in the word list. It is not difficult to add items to the word list, but the discrepancies in the list contents are surprising. In fact, it might be more appropriate for VARD to record “doest” as a variant of “do”, and “ha’st” of “have”.
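The kind of discrepancy described above can be checked mechanically. The following is a minimal sketch, not part of VARD itself: it assumes the variant list has already been loaded as a dictionary from variant spelling to normalised form and the word list as a set. The function name `audit_lists` and the tiny sample data are hypothetical, chosen only to mirror the “doest” and “ha’st” cases.

```python
def audit_lists(variants, words):
    """Report inconsistencies between a variant list and a word list.

    variants: dict mapping a variant spelling to its normalised form
    words:    set of forms the word list treats as valid (non-variant)
    """
    # Variants that also sit in the word list are accepted as-is,
    # so their variant entry never takes effect.
    shadowed = sorted(v for v in variants if v in words)
    # Normalised forms missing from the word list are themselves
    # likely to be flagged as variants.
    missing_targets = sorted({t for t in variants.values() if t not in words})
    return shadowed, missing_targets


# Hypothetical sample data echoing the cases discussed in the post.
variants = {"doest": "dost", "ha'st": "hast"}
words = {"doest", "do", "have"}

shadowed, missing = audit_lists(variants, words)
print("Variants overridden by the word list:", shadowed)
print("Normalised forms absent from the word list:", missing)
```

Run over the full lists, a check like this would give a rough sense of how many entries need attention before any training begins.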

For “liest”, the correct variant and word entries are present so that “liest” can be amended to “lie”, giving a known variant [KV] recall score of 100% (indicating this is not a known variant form of any other word). However, the default parameters (regardless of the F-score) favour “least” because that amendment strongly satisfies the other three criteria: letter replacement [LR] (the rules), phonetic matching [PM], and edit distance [ED]. Until human judgment intervenes with the weighting, “least” has the better statistical case. (Much the same applies to “goest” and “goosed”.)
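To see why three methods can outvote one, consider a toy model of the decision. This is an illustrative sketch only and does not reproduce VARD’s actual formula: it assumes each candidate’s score is simply the share of total method weight held by the methods that support it, and that “lie” is supported by KV alone while “least” is supported by LR, PM and ED. All weights are invented for the example.

```python
def score(candidate_methods, weights):
    """Score each candidate as the share of total weight supporting it.

    A deliberately simplified stand-in for VARD's scoring, not its real formula.
    """
    total = sum(weights.values())
    return {
        cand: sum(weights[m] for m in methods) / total
        for cand, methods in candidate_methods.items()
    }


def best(scores):
    """Return the highest-scoring candidate."""
    return max(scores, key=scores.get)


# Assumed support for each candidate normalisation of "liest".
support = {"lie": {"KV"}, "least": {"LR", "PM", "ED"}}

# Equal weights: three methods outvote the single known-variant match.
default_weights = {"KV": 1.0, "LR": 1.0, "PM": 1.0, "ED": 1.0}
# Hypothetical weights after training has rewarded the known-variant method.
trained_weights = {"KV": 4.0, "LR": 1.0, "PM": 1.0, "ED": 1.0}

default_scores = score(support, default_weights)
trained_scores = score(support, trained_weights)
print(best(default_scores), default_scores)
print(best(trained_scores), trained_scores)
```

With equal weights, “least” takes three quarters of the weight and clears a 50% threshold; once the known-variant method is weighted more heavily, “lie” overtakes it.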

In VARD’s defence, one need only intervene once with any of the “-st” verb endings in the text (before triggering the auto-normalisation process) for the weighting to shift in favour of the correct reading of “liest”. VARD learns well.

Rewme: space, cold, or dominion?

One of the ‘authentic’ EEBO extracts we’ve been testing with is taken from a medical text, A rich store-house or treasury for the diseased, 1596 (TCP A13300). As mentioned in a previous post, when VARD’s automated normalisation is run with the default 50% threshold, references to “Rewme” become “Room”. Looking again at what is happening beneath the surface, the first surprise is that there is an entry for “rewme” in the variant list, specifying it as a known variant of “room”.

This is unsatisfying with regard to EEBO-TCP: a search of the corpus shows that the word form “rewme” appears in 89 texts. Viewing each instance through Anupam Basu’s keyword-in-context interface shows that in 84 texts, “rewme” is used with the meaning “rheum”. Of the other five texts, one is Middle English biblical exegesis (attributed to John Purvey); committed to print as late as 1550, the text repeatedly uses “rewme” with the sense “realm” or “kingdom” (both earthly and divine). The remaining four were printed after 1650 and are either political or historical in intent, similarly using “rewme” as a spelling of “realm”. Nowhere in EEBO-TCP does “rewme” appear with the sense “room”.

However, removing it from the known variants (by setting its value to zero) and adding new variant entries for realm and rheum does not result in the desired auto-normalisation. Because both realm and rheum are now candidates, their KV recall score is halved (50%). At the same time, the preset frequencies strengthen room’s position (309) compared with realm (80) and rheum (50). In fact, the word list accompanying the EEBO set-up seems still to be based on the BNC, featuring robotic (OED 1928) and pulsar (OED 1968) with the same preset frequency as rheum.
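The arithmetic behind this can be laid out in a few lines. The sketch below uses only the figures quoted above; the helper `kv_recall` is a hypothetical simplification that splits the known-variant score evenly across a variant’s listed targets, which matches the halving described here but is not claimed to be VARD’s exact calculation.

```python
# Figures quoted in the post.
known_variants = {"rewme": ["realm", "rheum"]}
frequencies = {"room": 309, "realm": 80, "rheum": 50}
texts_total, texts_rheum = 89, 84


def kv_recall(variant, known):
    """Split the known-variant score evenly across listed targets (assumed model)."""
    targets = known.get(variant, [])
    return {t: 1 / len(targets) for t in targets}


recall = kv_recall("rewme", known_variants)
ranked = sorted(frequencies, key=frequencies.get, reverse=True)
share_rheum = texts_rheum / texts_total

print("KV recall per candidate:", recall)
print("Candidates by preset frequency:", ranked)
print(f"Share of texts using 'rewme' as rheum: {share_rheum:.0%}")
```

The two attested senses each receive half of the known-variant score, while the unattested “room” leads on preset frequency, even though “rheum” accounts for 84 of the 89 texts.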

So what does this mean for Linguistic DNA?

Again, it is possible to intervene with instances like rewme, whether through the training interface or by manipulating the frequencies. But it is evident that the scale of intervention required is considerable. Nor is it obvious that telling VARD that rewme is rheum about 90% of the time that it occurs in EEBO-TCP, and realm 10% of the time, will help the auto-normalisation process to know when and where to distribute the forms in practice.

The frustrating thing is that the distribution is predictable: in a political text, it is normally “realm”; and in a medical text, it is “rheum”. But VARD seems to have no mechanism to recognise or respond to the contexts of discourse that would so quickly become visible with topic modelling. (Consider the clustering of the four humours in early modern medicine, for example.) I have a feeling this would be where SAMUELS and the Historical Thesaurus come in… if only SAMUELS didn’t rely on VARD’s prior intervention!
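To make the idea of context-sensitivity concrete, here is a minimal sketch of choosing between “realm” and “rheum” from the surrounding words. It is not a feature of VARD or SAMUELS, and it is far cruder than topic modelling: the cue-word sets are invented for illustration, and the function `disambiguate` is hypothetical.

```python
# Invented cue words for each sense; a real system would derive these from data.
CUES = {
    "rheum": {"phlegm", "humour", "cough", "head", "medicine", "choler"},
    "realm": {"king", "crown", "parliament", "subjects", "kingdom", "prince"},
}


def disambiguate(tokens, cues=CUES):
    """Pick the sense whose cue words overlap most with the context.

    Returns None when no cue words are present or the senses tie.
    """
    context = {t.lower() for t in tokens}
    counts = {sense: len(context & vocab) for sense, vocab in cues.items()}
    top = max(counts.values())
    winners = [sense for sense, n in counts.items() if n == top]
    if top == 0 or len(winners) > 1:
        return None
    return winners[0]


medical = "a medicine for the rewme and phlegm in the head".split()
political = "the king of this rewme and his subjects".split()

print(disambiguate(medical))
print(disambiguate(political))
```

Returning `None` for ties or empty evidence leaves those cases for human review rather than forcing a guess.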

Wordcloud image created with Wordle.