What exactly does EEBO represent? Is it representative?

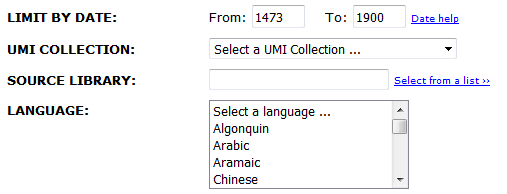

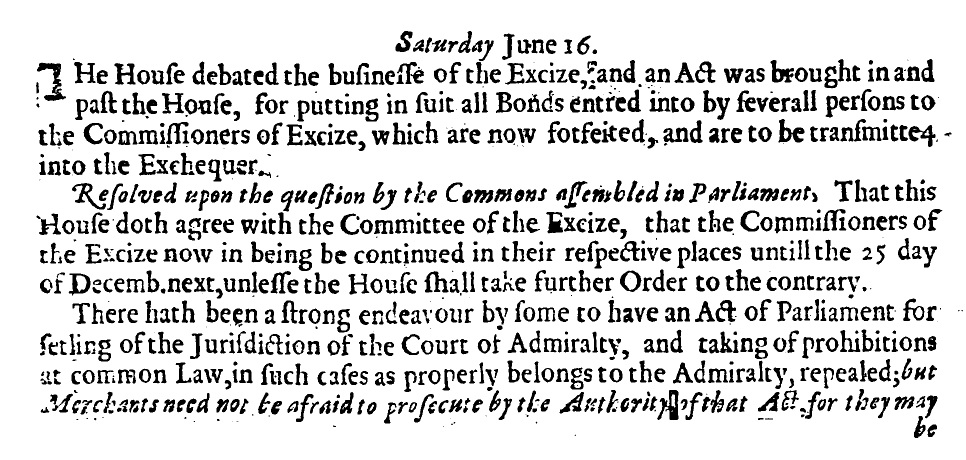

Often, the question of whether a corpus or data set is representative is answered first by describing what the corpus does and does not contain. What does EEBO contain? As Iona Hine has explained here, EEBO contains Early Modern English, but it is in some ways much larger than that, and in other ways much more limited. It is larger because it includes texts in many languages other than English, printed in the British Isles (and beyond) between 1476 and 1700. It is more limited because it contains only print, whereas Early Modern English was also hand-written and spoken, across a large number of varieties.

Given that EEBO contains Early Modern print, does EEBO represent Early Modern print? In order to address this question meaningfully, it’s crucial first to define representativeness.

In corpus linguistics, as in other data sciences and in statistics, representativeness is a relationship that holds between a sample and a population. A sample represents a larger population if the sample was obtained rigorously and systematically in relation to a well-defined population. If the sample is not representative in this way, it is an arbitrary sample or a convenience sample – i.e. it was not obtained rigorously and systematically in relation to a well-defined population. Representativeness allows us to examine the sample and then draw conclusions about the population. This is a fundamental element of inferential statistics, which is used in data science from epidemiology to corpus linguistics.

Was EEBO sampled systematically and rigorously in relation to a well-defined population? Not at all. EEBO was sampled arbitrarily, by convenience – first, including only texts that have (arbitrarily) survived; then including texts that were (arbitrarily) available for scanning and transcription; and, finally, including those texts that were (arbitrarily) of interest to scholars involved with EEBO at the time. Could we, perhaps, argue that EEBO represents Early Modern print that survived until the 21st century, was available for scanning and transcription, and (in many cases) was of interest to scholars involved with the project at the time? I think we would have to concede that EEBO wasn’t sampled systematically and rigorously in relation to that definition, and that the arbitrary elements of that population render it ill-defined.

So, what does EEBO represent? Nothing at all.

It’s difficult, therefore, to test research questions using inferential statistics. For example, we might be interested in asking: Do preferences for the near-synonyms civil, public, and civic change over time in Early Modern print? We can pursue such a question in a straightforward way, looking at frequencies of each word over time, in context, to see if there are changes in use, with each word rising or falling in frequency. In fact, we can quite reliably discern what happens to these preferences within EEBO. But our question, as stated, was about Early Modern print. It is the quantitative step from the sample (EEBO) to the population (Early Modern print) that is problematic. Suppose that we do find a shifting preference for each of these words over time. Because EEBO doesn’t represent the population of Early Modern print in any clear way, we can’t rely on statistics to conclude that this is in fact a correlation between preferences and time, rather than an artefact of the arbitrariness of the sampling. The observation might be due to any number of textual or sociolinguistic variables that were left undefined in our arbitrary sample – including variation in topics, or genres, or authorial style, or even authors’ gender, age, education, or geographic profile.
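To make the descriptive step concrete, here is a minimal sketch of counting the three near-synonyms per decade and normalising by the number of tokens in that decade. It is an illustration under stated assumptions, not LDNA's actual method: the function name `relative_frequencies`, the `(year, text)` input shape, and the toy documents are all invented for this example, and the whitespace tokenisation ignores Early Modern spelling variation. Whatever the numbers show, they describe the sample that was counted, not Early Modern print as a whole.

```python
from collections import Counter, defaultdict

TARGETS = ("civil", "public", "civic")

def relative_frequencies(docs, targets=TARGETS, per=1_000_000):
    """Return {decade: {word: occurrences per `per` tokens}}.

    `docs` is an iterable of (year, text) pairs (a hypothetical input shape).
    Tokenisation is a naive lowercase whitespace split with punctuation
    stripped; it does not handle spelling variants.
    """
    totals = Counter()            # tokens per decade
    hits = defaultdict(Counter)   # target-word counts per decade
    for year, text in docs:
        decade = year // 10 * 10
        tokens = text.lower().split()
        totals[decade] += len(tokens)
        for tok in tokens:
            word = tok.strip(".,;:!?()\"'")
            if word in targets:
                hits[decade][word] += 1
    return {
        decade: {w: hits[decade][w] * per / totals[decade] for w in targets}
        for decade in sorted(totals)
        if totals[decade]
    }

# Invented toy documents, for illustration only (not EEBO data).
toy_docs = [
    (1601, "the civil magistrate and the public good"),
    (1605, "a civil war"),
    (1652, "the public interest and public peace"),
]
freqs = relative_frequencies(toy_docs, per=100)
for decade, by_word in freqs.items():
    print(decade, by_word)
```

Normalising by decade totals matters because the amount of surviving text differs greatly from decade to decade; raw counts alone would mostly track corpus size.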

It is as though we were testing children’s medication on an arbitrary group of people who happened to be walking past the hospital on a given day. That’s clearly a problem. We want to be sure that children’s medication is tested on children – but not simply on any children, because we also want to be sure that it isn’t tested on children arbitrarily sampled, for example, from an elite after-school athletics programme for 9-year-olds that happens to be adjacent to the hospital. We want the medication to be tested on a systematic cross-section of children, or on a group of children that we know is composed of more and less healthy kids across a defined age range, so that we can draw conclusions about all children, based on our sample. If we use a statistical analysis of EEBO (an arbitrary sample) to draw conclusions about Early Modern print (a population), it’s as though we’re using an arbitrary sample of available kids to prove that a medication is safe for the population of all kids. (Linguistics is a lot safer than epidemiology.)

If one were interested in reliably representing extant Early Modern print, one might design a representative sample in various ways. It would be possible to systematically identify genres or topics or even text lengths, and ensure that all were sampled. If we took on such a project, we might want to ensure sampling all genders, education levels, and so on (indeed, historical English corpora such as the Corpus of English Dialogues, or ARCHER, are systematically sampled in clear ways). We would need to take decisions about proportionality – if we’re interested in comparing the writing of men and women, for example, we might want large, equal samples of each group. But if we wanted proportional representation across the entire population of writers, we might include a majority of men, with a small proportion of women – reflecting the bias in Early Modern publishing. Or, we might go further and attempt to represent not the bias in Early Modern publication, but instead the bias in Early Modern reception, attempting to represent how many readers actually read women’s works compared to men’s works (though such metadata isn’t readily available, and obtaining it would be a project in itself). Each of these decisions might be appropriate for different purposes.
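The proportionality decision described above can be sketched as a stratified sample with two allocation rules. This is only an illustration under assumptions: the function `stratified_sample`, the `gender` field, and the 950/50 split are invented for the example and are not figures about Early Modern publishing. Equal allocation suits comparison between groups; proportional allocation mirrors each group's share of the defined population.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(records, key, n_total, allocation="proportional", seed=0):
    """Draw a stratified random sample from a list of dict records.

    allocation="equal":        the same number of records from each stratum.
    allocation="proportional": each stratum's share mirrors its population share.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for rec in records:
        strata[rec[key]].append(rec)

    sample = []
    for members in strata.values():
        if allocation == "equal":
            k = n_total // len(strata)
        elif allocation == "proportional":
            k = round(n_total * len(members) / len(records))
        else:
            raise ValueError(f"unknown allocation: {allocation!r}")
        # A stratum cannot contribute more records than it contains.
        k = min(k, len(members))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population of 1,000 catalogued texts; the split is invented.
population = (
    [{"id": i, "gender": "m"} for i in range(950)]
    + [{"id": 950 + i, "gender": "f"} for i in range(50)]
)

equal = Counter(r["gender"] for r in stratified_sample(population, "gender", 100, "equal"))
prop = Counter(r["gender"] for r in stratified_sample(population, "gender", 100, "proportional"))
print("equal:", dict(equal))
print("proportional:", dict(prop))
```

Either rule presupposes a well-defined population with the relevant metadata for every text, which is exactly what is missing for EEBO.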

So, what are we to do? LDNA hasn’t thrown stats out the window, nor have we thrown EEBO out the window. But we are careful to remember that our statistics are describing EEBO rather than indicating conclusions about a broader population. And we haven’t stopped there – we will draw conclusions about Early Modern print, but not via statistics, and not simply via the sample that is EEBO. Instead, we will draw such conclusions as close readers, linguists, philologists, and historians. We will use qualitative tools and historical, social, cultural, political, and economic insights about Early Modern history, in systematic and rigorous ways. Our intention is to read texts and contexts, and to evaluate those contexts in relation to our own knowledge about history, society, and culture. In other words, we are taking a principled interpretive leap from EEBO to Early Modern print. That leap is necessary, because there’s no inherent representative connection between the two.