In 2016, Dr Kris Heylen (KU Leuven) spent a week in Sheffield as an HRI Visiting Fellow, demonstrating techniques for studying change in “lexical concepts” and encouraging the Linguistic DNA team to articulate the distinctive features of the “discursive concept”.

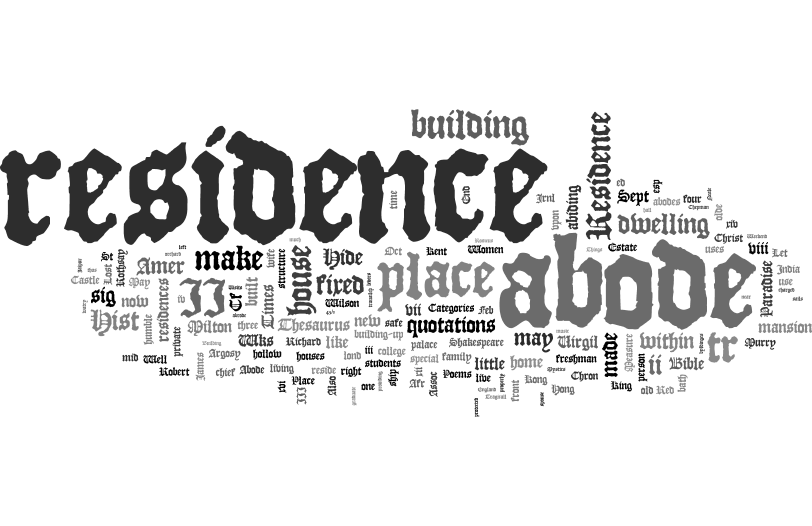

Earlier this month, the Linguistic DNA project hosted Dr Kris Heylen of KU Leuven as a visiting fellow (funded by the HRI Visiting European Fellow scheme). Kris is a member of the Quantitative Lexicology and Variational Linguistics (QLVL) research group at KU Leuven, which has conducted unique research into the significance of how words co-occur across different ‘windows’ of text (reported by Seth in an earlier blogpost). In his work, Kris has focused particularly on the value of visualisation as a means of exploring co-occurrence data, and it was from this expertise that the Linguistic DNA project wished to learn.

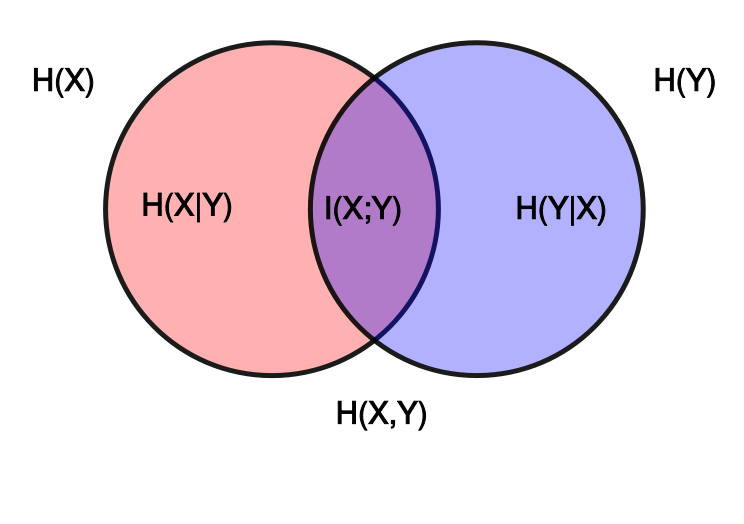

Kris and his colleagues have worked extensively on how concepts are expressed in language, with case studies in both Dutch and English, drawing on data from the 1990s and 2000s. This approach is broadly sympathetic to our work in Linguistic DNA, though we take an interest in a higher level of conceptual manifestation (“discursive concepts”), whereas the Leuven team are interested in so-called “lexical concepts”.

In an open lecture on Tracking Conceptual Change, Kris gave two examples of how the Leuven techniques (under the umbrella of “distributional semantics”) can be applied to show variation in language use, according to context (e.g. types of newspaper) and over time.

A first case study explored the notion of a ‘person with an immigration background’, looking at how this was expressed in highbrow and lowbrow Dutch-language newspapers in the period from 1999 to 2005. The investigation began with the word allochtoon, and identified (through vector analysis) migrant as the nearest synonym in use. Querying the newspaper data across time exposed the seasonality of media discourse about immigration (high in spring and autumn, low during parliamentary breaks or holidays). It was also possible to document a decrease in the ‘market share’ of allochtoon compared with migrant and, using hierarchical cluster analysis, to show how each term was distributed across different areas of discourse (comparing discussion of legal and labour-market issues, for example).

A second comparison examined adjectives of ‘positive evaluation’, using the Corpus of Historical American English (COHA, 1860-present). With each year’s data organised as a scatter plot in semantic space, the path of an adjective could be traced in relation to others, moving closer to or further from similar words. The path of terrific from ‘frightening’ to ‘great’ provided a vivid example of change through the 1950s and 1960s.
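For readers unfamiliar with the ‘vector analysis’ mentioned in the first case study, the underlying idea can be illustrated with a very small sketch. This is not the Leuven team's implementation: it is a minimal illustration, assuming raw co-occurrence counts within a fixed window and cosine similarity as the measure of closeness. The function names and the three toy sentences (invented English stand-ins for newspaper text) are hypothetical.

```python
from collections import Counter, defaultdict
from math import sqrt


def cooccurrence_vectors(sentences, window=2):
    """For each word, count the words found within `window` tokens of it."""
    vectors = defaultdict(Counter)
    for tokens in sentences:
        for i, target in enumerate(tokens):
            start = max(0, i - window)
            end = min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[target][tokens[j]] += 1
    return dict(vectors)


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest_neighbours(word, vectors, n=3):
    """Rank all other words by how similar their contexts are to `word`."""
    target = vectors.get(word, Counter())
    scores = [(other, cosine(target, vec))
              for other, vec in vectors.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:n]


# Invented toy data: two sentences differ only in the word of interest.
toy_sentences = [
    "the allochtoon community faces labour market barriers".split(),
    "the migrant community faces labour market barriers".split(),
    "parliament debates the weather report today".split(),
]

vectors = cooccurrence_vectors(toy_sentences, window=2)
neighbours = nearest_neighbours("allochtoon", vectors, n=3)
print(neighbours)
```

In this toy data, migrant ranks first because it appears in exactly the same contexts as allochtoon. Real studies work with far larger corpora and typically weight the counts rather than using them raw, but the principle is the same: words that keep the same company sit close together in semantic space.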

During his visit, Kris explored some of the first outputs from the Linguistic DNA processor: material printed in the British Isles (or in English) in two years, 1649 and 1699, transcribed for the Text Creation Partnership and further processed with the MorphAdorner tool developed by Martin Mueller and Philip Burns at Northwestern. Having run this material through additional processes developed at Leuven, Kris led a workshop for Sheffield postgraduate and early career researchers and members of the LDNA team, in which we learned different techniques for visualising the distribution of heretics and schismatics in the seventeenth century.

The lecture audience and workshop participants were drawn from fields including English Literature, History, Computer Science, East Asian Studies, and the School of Languages and Cultures. Prompted partly by the distribution of the Linguistic DNA team (located in Sussex and Glasgow as well as Sheffield), both lecture and workshop were livestreamed over the internet, extending our audiences to Birmingham, Bradford, and Cambridge. We’re exceedingly grateful for the technical support that made this possible.

Time was also set aside to discuss the potential for future collaboration with Kris and others at Leuven, including participation of the QLVL team in LDNA’s next methodological workshop (University of Sussex, September 2016) and other opportunities to build on our complementary fields of expertise.