Conference reflections jointly written with Justyna Robinson

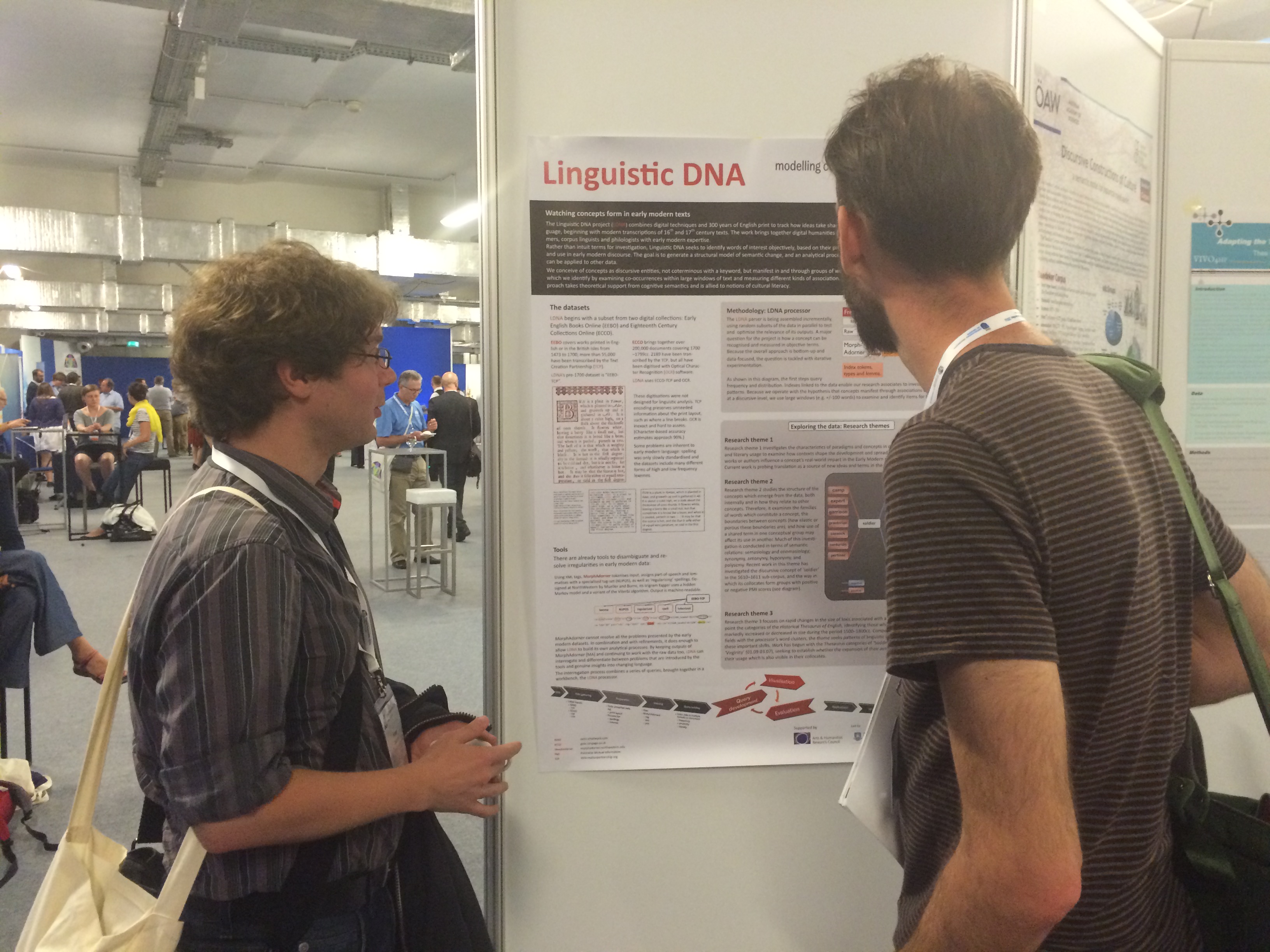

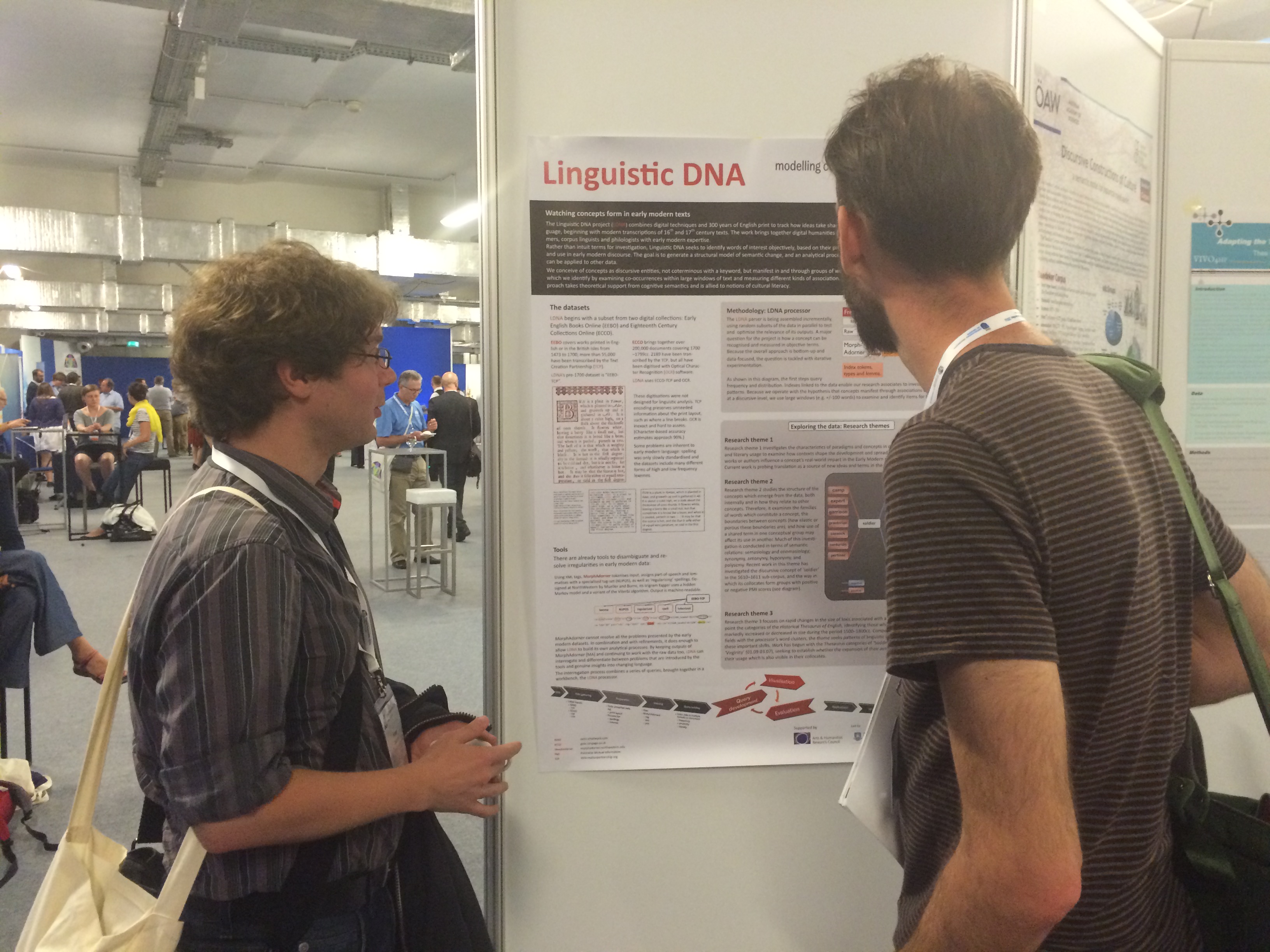

Four members of the LDNA team—Marc Alexander, Justyna Robinson, Brian Aitken, and Fraser Dallachy—attended this year’s Digital Humanities (DH) conference in Kraków, Poland. With over 800 attendees, the conference is an excellent opportunity to exchange ideas, learn of new areas of potential interest, and network with academics from around the world. The team presented a version of the project’s poster at the event (attached to this post), giving an overview of the project, the technical steps which have been taken so far, and introducing the research themes.

Digital methods of textual analysis are an important subject for the DH attendees, and there were several papers outlining approaches and results from such research. One of the most relevant of these for us was the paper by Glenn Roe et al. on identification of re-used text in Eighteenth Century Collections Online (ECCO). After eliminating re-printings of texts, this project used a specially developed tool which found repeated passages, indicating where an author had re-used their own or another’s words. The results are available and searchable on their website. In the same session, a team led by Monica Berti at Leipzig described a method of identifying and labelling fragments of text quoted from ancient Greek authors. These projects represent something like a parallel research track to ours, tracing the history of ideas through replication of passages rather than through more abstract word clusters. Early English Books Online (EEBO) also received some attention, with Daniel James Powell giving an overview of its history and importance to digital research on historical texts.

Discussion with other attendees at the poster session was especially productive, and resulted in several strong leads for the team to follow up. A subject which was mentioned to us repeatedly was that of topic modelling. Multiple panels were dedicated to the use of these methods to extract information about the contents of texts, an approach which LDNA has considered employing. The team at Saarland studying the Royal Society Corpus (with whom LDNA is already in contact) use topic modelling to study the development of scientific concepts and terminology. Their results were encouraging, allowing them to identify word groupings which represent scientific disciplines such as physiology, mechanical engineering, and metallurgy. Following these topics through time showed that the number of topics increases whilst their vocabulary becomes more specialised. Although LDNA has reservations about how useful topic modelling is for our purposes, the work being conducted at Saarland refines and implements its methodology in a way which we would seek to learn from if we do choose to pursue it further.

At the poster session

Visualising big data is of central interest to the LDNA project, especially in the context of the upcoming LDNA Visualisation Workshop. With this view in mind, we paid particular attention to projects that presented new and interesting ways of seeing large data. A number of presentations focused on network visualisations. These often link metadata, e.g. around social networks of royal societies or academies as based on letter correspondence. An interesting visualisation that present unstructured linguistic data was presented by the EPFL team. Vincent Buntinx, Cyril Bornet, and Frédéric Kaplan visualised lexical usage in 200 years of newspapers on a circle with the radial dimension representing the number of years a word has been in use, and the circumferential dimension showing a period of use of words. [1]

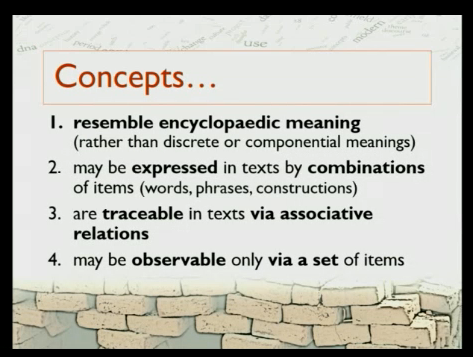

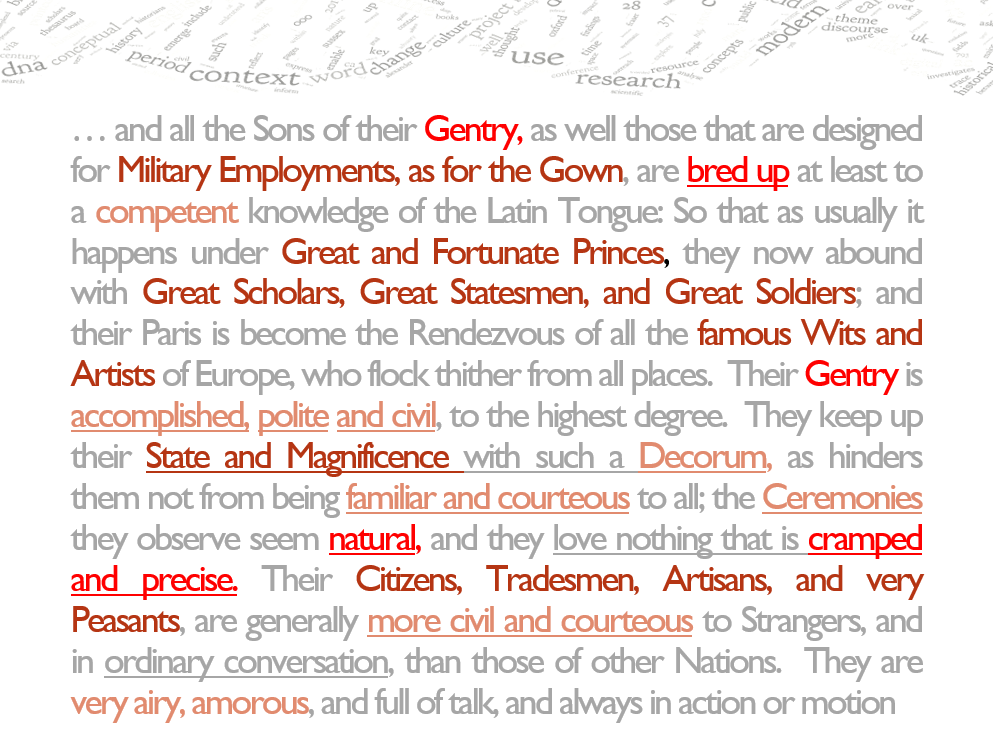

Stylometrics, with its interest in being able to identify and measure aspects of language which contribute to the impression of authorial style, produced some interesting papers. One of the common themes for stylometrics and other DH strands of research is the way concepts are operationalised. The varied approaches to concepts taken by DH researchers were noticeable, for example, whether each noun can be considered to be a concept, or a concept can be defined as “a functional thing”. This suggests that the work on concept identification undertaken by the LDNA team will be of interest to the wider DH community. Also amongst the stylometric papers was a look at historical language change by Maciej Eder and Rafal Górski which used bootstrap consensus network analysis on part of speech (POS) tagged texts to contrast syntax and sentence structure between time periods. The paper used multidimensional scaling (MDS) to reduce POS tagged texts to a single value which could then be plotted against time, allowing them to show that a gradual change in the MDS results can be discerned between the earliest and latest texts. The paper both highlighted how useful a visualisation can be for identifying a change, and how difficult it can be to quantify exactly what the visualisation shows.

However, on a different but very important note, a strong theme of the conference was that of diversity, with a thread of panels discussing the different ways in which this subject is applicable to the digital humanities. From a personal point of view, I think LDNA has a strong awareness of both the scope and the limitations of our interests and approaches, (although we can never afford to be complacent). We’ve considered what our textual resources represent, and the RAs are soon to explore this subject from different angles in future blog posts. EEBO and other text collections are more expansive, inclusive, and diverse than prior research has been able to access, and this feels like a part of an enormously positive movement in academia to open up more and more data for new kinds of study. As extensive as our resources are, however, they still have limitations reflecting the (mostly Western, mostly white, mostly male, mostly middle-to-upper class) societal groups who were able to read, write, and print the words which ended up in these collections. The resources open to academia are continually growing, and hopefully this expanding diversity will open up ever more of the world’s knowledge to ever more of its population. Whilst the discussions at this conference have made clear that there is a long way to go in fully embracing diversity in the digital humanities, there are indications that the situation is improving, and it is incumbent upon us all to ensure that this continues.

For another view of the conference, Brian Aitken, Digital Humanities Research Officer at Glasgow, has written about his own experience on his blog.

———

1. Studying Linguistic Changes on 200 Years of Newspapers, Vincent Buntinx, Cyril Bornet, Frédéric Kaplan (EPFL (École polytechnique fédérale de Lausanne), Switzerland)